Effective altruism has gone mainstream. Where does that leave it?

Seven years ago, I was invited to my first EA Global, the flagship conference of the effective altruism movement. I was fascinated by effective altruism (EA), which defines itself as “using evidence and reason to figure out how to benefit others as much as possible,” in large part because of the moral seriousness of its practitioners.

My first piece on EA, from 2013, tracked three people who were “earning to give”: taking high-paying jobs in sectors like tech or finance for the express purpose of giving half or more of their earnings away to highly effective charities, where the money could save lives. I’d met other EAs who made much more modest salaries but still gave a huge share of their income away, or who donated their kidneys to strangers. These were people who were willing to give up a lot — even parts of their own bodies — to help others.

So I agreed to speak at a panel at the conference. Along the way I asked if the organizers could pay for my flight and hotel, as is the norm for journalists invited to speak at events. They replied that they were short on cash — and I immediately felt like an asshole. I had asked the people at the “let’s all give our money to buy the world’s poor malaria bed nets” conference to buy me a plane ticket? Obviously the money should go to bed nets!

Flash forward to 2021. The foundation of Sam Bankman-Fried, the crypto billionaire and dedicated effective altruist donor, announced a program meant to bring fellow EAs to the Bahamas, where his crypto exchange company FTX is headquartered for largely regulatory reasons. The 10-25 accepted applicants would receive funding for travel to and from the Bahamas, housing for “up to 6 months,” and a one-time stipend of $10,000 each. Pennies weren’t exactly being pinched anymore.

It’s safe to say that effective altruism is no longer the small, eclectic club of philosophers, charity researchers, and do-gooders it was just a decade ago. It’s an idea, and group of people, with roughly $26.6 billion in resources behind them, real and growing political power, and an increasing ability to noticeably change the world.

EA, as a subculture, has always been characterized by relentless, sometimes navel-gazing self-criticism and questioning of assumptions, so this development has prompted no small amount of internal consternation. A frequent lament in EA circles these days is that there’s just too much money, and not enough effective causes to spend it on. Bankman-Fried, who got interested in EA as an undergrad at MIT, “earned to give” through crypto trading so hard that he’s now worth about $12.8 billion as of this writing, almost all of which he has said he plans to give away to EA-aligned causes. (Disclosure: Future Perfect, which is partly supported through philanthropic giving, received a project grant from Building a Stronger Future, Bankman-Fried’s philanthropic arm.)

Along with the size of its collective bank account, EA’s priorities have also changed. For a long time, much of the movement’s focus was on “near-termist” goals: reducing poverty or preventable death or factory farming abuses right now, so humans and animals can live better lives in the near-term.

But as the movement has grown richer, it is also increasingly becoming “longtermist.” That means embracing an argument that because so many more humans and other intelligent beings could live in the future than live today, the most important thing for altruistic people to do in the present moment is to ensure that that future comes to be at all by preventing existential risks — and that it’s as good as possible. The impending release of What We Owe to the Future, an anticipated treatise on longtermism by Oxford philosopher and EA co-founder Will MacAskill, is indicative of the shift.

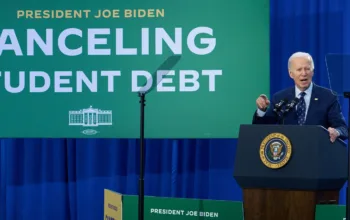

The movement has also become more political — or, rather, its main benefactors have become more political. Bankman-Fried was one of the biggest donors to Joe Biden’s 2020 campaign, as were Cari Tuna and Dustin Moskovitz, the Facebook/Asana billionaires who before Bankman-Fried were by far the dominant financial contributors to EA causes. More recently, Bankman-Fried spent $10 million in an unsuccessful attempt to get Carrick Flynn, a longtime EA activist, elected to Congress from Oregon. Bankman-Fried has said he’ll spend “north of $100 million” on the 2024 elections, spread across a range of races, but partially to prevent Donald Trump from returning to the White House.

But his motivations aren’t those of an ordinary Democratic donor — Bankman-Fried told podcast host Jacob Goldstein that fighting Trump was less about promoting Democrats than ensuring “sane governance” in the US, which could have “massive, massive, ripple effects on what the future looks like.” Indeed, Bankman-Fried is somewhat bipartisan in his giving. While the vast majority of his political donations have gone to Democrats, 16 of the 39 candidates endorsed by the Bankman-Fried-funded Guarding Against Pandemics PAC are Republicans as of this writing.

Effective altruism in 2022 is richer, weirder, and wields more political power than effective altruism 10, or even five years ago. It’s changing and gaining in importance at a rapid pace. The changes represent a huge opportunity — and also novel dangers that could threaten the sustainability and health of the movement. More importantly, the changes could either massively expand or massively undermine effective altruism’s ability to improve the broader world.

The origins of effective altruism

The term “effective altruism,” and the movement as a whole, can be traced to a small group of people based at Oxford University about 12 years ago.

In November 2009, two philosophers at the university, Toby Ord and Will MacAskill, started a group called Giving What We Can, which promoted a pledge whose takers commit to donating 10 percent of their income to effective charities every year (several Voxxers, including me, have signed the pledge).

In 2011, MacAskill and Oxford student Ben Todd co-founded a similar group called 80,000 Hours, which was meant to complement Giving What We Can’s focus on how to give most effectively with a focus on how to choose careers where one can do a lot of good. Later in 2011, Giving What We Can and 80,000 Hours wanted to incorporate as a formal charity, and needed a name. About 17 people involved in the group, per MacAskill’s recollection, voted on various names, like the “Rational Altruist Community” or the “Evidence-based Charity Association.”

The winner was “Centre for Effective Altruism.” This was the first time the term took on broad usage to refer to this constellation of ideas.

The movement blended a few major intellectual sources. The first, unsurprisingly, came from philosophy. Over decades, Peter Singer and Peter Unger had developed an argument that people in rich countries are morally obligated to donate a large share of their income to help people in poorer countries. Singer memorably analogized declining to donate large shares of your income to charity to letting a child drowning in a pond die because you don’t want to muddy your clothes rescuing him. Hoarding wealth rather than donating it to the world’s poorest, as Unger put it, amounts to “living high and letting die.” Altruism, in other words, wasn’t an option for a good life — it was an obligation.

Ord told me his path toward founding effective altruism began in 2005, when he was completing his BPhil, Oxford’s infamously demanding version of a philosophy master’s. The degree requires that students write six 5,000-word, publication-worthy philosophy papers on pre-assigned topics, each over the course of a few months. One of the topics listed Ord’s year was, “Ought I to forgo some luxury whenever I can thereby enable someone else’s life to be saved?” That led him to Singer and Unger’s work, and soon the question — ought I forgo luxuries? which ones? how much? — began to consume his thoughts.

Then, Ord’s friend Jason Matheny (then a colleague at Oxford, today CEO of the Rand Corporation) pointed him to a project called DCP2. DCP stands for “Disease Control Priorities” and originated with a 1993 report published by the World Bank that sought to measure how many years of life could be saved by various public health projects. Ord was struck by just how vast the difference in cost-effectiveness between the interventions in the report was. “The best interventions studied were about 10,000 times better than the least good ones,” he notes.

It occurred to him that if residents of rich countries are morally obligated to help residents of less wealthy ones, they might be equally obligated to find the most cost-effective ways to help. Spending $50,000 on the most efficient project saved 10 times as many life-years as spending $50 million on the least efficient project would. Directing resources toward the former, then, would vastly increase the amount of good that rich-world donors could do. It’s not enough merely for EAs to give — they must give effectively.
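The arithmetic behind that comparison can be checked with a minimal sketch. It assumes Ord’s rough figure that the best interventions are about 10,000 times as cost-effective as the worst, and it normalizes the worst project to one life-year per dollar purely for illustration. The function name and the units are hypothetical and are not taken from DCP2.

```python
# Illustrative only: the 10,000x ratio is Ord's rough figure quoted above;
# the "1 life-year per dollar" baseline is an arbitrary normalization.
BEST_TO_WORST_RATIO = 10_000


def life_years(dollars: float, effectiveness_per_dollar: float) -> float:
    """Life-years saved for a given spend at a given cost-effectiveness."""
    return dollars * effectiveness_per_dollar


worst = 1.0                         # arbitrary baseline (hypothetical units)
best = worst * BEST_TO_WORST_RATIO  # best project, per Ord's ratio

small_gift_best = life_years(50_000, best)        # $50,000 on the best project
large_gift_worst = life_years(50_000_000, worst)  # $50 million on the worst

# How many times more life-years the small, well-targeted gift saves.
advantage = small_gift_best / large_gift_worst
print(advantage)
```

Because the baseline cancels out of the ratio, the result depends only on the 10,000-to-1 gap and the two dollar amounts: a gift one-thousandth the size does ten times the good.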

Ord and his friends at Oxford weren’t the only ones obsessing over cost-effectiveness. Over in New York, an organization called GiveWell was taking shape. Founded in 2007 by Holden Karnofsky and Elie Hassenfeld, both alums of the eccentric hedge fund Bridgewater Associates, the group sought to identify the most cost-effective giving opportunities for individual donors. At the time, such a service was unheard of — charity evaluators at that point, like Charity Navigator, focused more on ensuring that nonprofits were transparent and spent little on overhead. By making judgments about which nonprofits to give to — a dollar to the global poor was far better than, say, a museum — GiveWell ushered in a sea change in charity evaluation.

Those opportunities were overwhelmingly found outside developed countries, primarily in global health. By 2011, the group had settled on recommending international global health charities focused on sub-Saharan Africa.

“Even the lowest-income people in the U.S. have (generally speaking) far greater material wealth and living standards than the developing-world poor,” the group explains today. “We haven’t found any US poverty-targeting intervention that compares favorably to our international priority programs” in terms of quality of evidence or cost-effectiveness. If the First Commandment of EA is to give, and the Second Commandment is to do so effectively, the Third Commandment is to do so where the problem is tractable, meaning that it’s actually possible to change the underlying problem by devoting more time and resources to it. And as recent massive improvements in life expectancy suggest, global health is highly tractable.

Before long, it was clear that Ord and his friends in Oxford were doing something very similar to what Hassenfeld and Karnofsky were doing in Brooklyn, and the two groups began talking (and, of course, digging into each other’s cost-effectiveness analyses, which in EA is often the same thing). That connection would prove immensely important to effective altruism’s first surge in funding.

“YOLO #sendit”

In 2011, the GiveWell team made two very important new friends: Cari Tuna and Dustin Moskovitz.

The latter was a co-founder of Facebook; today he runs the productivity software company Asana. He and his wife Tuna, a retired journalist, command some $13.8 billion as of this writing, and they intend to give almost all of it away to highly effective charities. As of July 2022, their foundation has given out over $1.7 billion in publicly listed grants.

After connecting with GiveWell, they wound up using the organization as a home base to develop what is now Open Philanthropy, a spinoff group whose primary task is finding the most effective recipients for Tuna and Moskovitz’s fortune. Because of the vastness of that fortune, Open Phil’s comparatively long history (relative to, say, FTX Future Fund), and the detail and rigor of its research reports on areas it’s considering funding, the group has become by far the most powerful single entity in the EA world.

Tuna and Moskovitz were the first tech fortune in EA, but they would not be the last. Bankman-Fried, the child of two “utilitarian leaning” Stanford Law professors, embraced EA ideas as an undergraduate at MIT, and decided to “earn to give.”

After graduation in 2014, he went to a small firm called Jane Street Capital, then founded the trading firm Alameda Research and later FTX, an exchange for buying and selling crypto and crypto-related assets, like futures. By 2021, FTX was valued at $18 billion, making the then-29-year-old a billionaire many times over. He has promised multiple times to give almost that entire fortune away.

The steady stream of billionaires embracing EA has left it in an odd situation: It has a lot of money, and substantial uncertainty about where to put it all, uncertainty which tends to grow rather than ebb with the movement’s fortunes. In July 2021, Ben Todd, who co-founded and runs 80,000 Hours, estimated that the movement had, very roughly, $46 billion at its disposal, an amount that had grown by 37 percent a year since 2015. And only 1 percent of that was being spent every year.

Moreover, the sudden wealth altered the role longtime, but less wealthy, EAs play in the movement. Traditionally, a key role of many EAs was donating to maximize funding to effective causes. Jeff Kaufman, one of the EAs engaged in earning-to-give whom I profiled back in 2013, until recently worked as a software engineer at Google. In 2021, he and his wife Julia Wise (an even bigger figure in EA as the full-time community liaison for the Centre for Effective Altruism) earned $782,158 and donated $400,000 (they make all these numbers public for transparency).

That’s hugely admirable, and much, much more than I donated last year. But that same year, Open Phil distributed over $440 million (actually over $480 million due to late grants, a spokesperson told me). Tuna and Moskovitz alone had the funding capacity of over a thousand less-wealthy EAs, even high-profile EAs dedicated to the movement who worked at competitive, six-figure jobs. Earlier this year, Kaufman announced he was leaving Google, and opting out of “earning to give” as a strategy, to do direct work for the Nucleic Acid Observatory, a group that seeks to use wastewater samples to detect future pandemics early. Part of his reasoning, he wrote on his blog, was that “There is substantially more funding available within effective altruism, and so the importance of earning to give has continued to decrease relative to doing things that aren’t mediated by donations.”

That said, the new funding comes with a lot of uncertainty and risk attached. Given how exposed EA is to the financial fortunes of a handful of wealthy individuals, swings in the markets can greatly affect the movement’s short-term funding conditions.

In June 2022, the crypto market crashed, and Bankman-Fried’s net worth, as estimated by Bloomberg, crashed with it. He peaked at $25.9 billion on March 29, and as of June 30 was down more than two-thirds to $8.1 billion; it’s since rebounded to $12.8 billion. That’s obviously nothing to sneeze at, and his standard of living isn’t affected at all. (Bankman-Fried is the kind of vegan billionaire known for eating frozen Beyond Burgers, driving a Corolla, and sleeping on a bean bag chair.) But you don’t need to have Bankman-Fried’s math skills to know that $25.9 billion can do a lot more good than $12.8 billion.

Tuna and Moskovitz, for their part, still hold much of their wealth in Facebook stock, which has been sliding for months. Moskovitz’s Bloomberg-estimated net worth peaked at $29 billion last year. Today it stands at $13.8 billion. “I’ve discovered ways of losing money I never even knew I had in me,” he jokingly tweeted on June 19.

But markets change fast, crypto could surge again, and in any case Moskovitz and Bankman-Fried’s combined net worth of $26.6 billion is still a lot of money, especially in philanthropic terms. The Ford Foundation, one of America’s longest-running and most prominent philanthropies, is only worth $17.4 billion. EA now commands one of the largest financial arsenals in all of US philanthropy. And the sheer bounty of funding is leading to a frantic search for places to put it.

One option for that bounty is to look to the future — the far future. In February 2022, the FTX Foundation, a philanthropic entity founded chiefly by Bankman-Fried, along with his FTX colleagues Gary Wang and Nishad Singh and his Alameda colleague Caroline Ellison, announced its “Future Fund”: a project meant to donate money to “improve humanity’s long-term prospects” through the “safe development of artificial intelligence, reducing catastrophic biorisk, improving institutions, economic growth,” and more.

The fund announced it was looking to spend at least $100 million in 2022 alone, and it already has: On June 30, barely more than four months after the fund’s launch, it stated that it had already given out $132 million. Giving money out that fast is hard. Doing so required giving in big quantities ($109 million was spent on grants over $500,000 each), as well as unusual methods like “regranting”: giving over 100 individuals trusted by the Future Fund budgets of hundreds of thousands or even millions of dollars each, and letting them distribute it as they like.

The rush of money led to something of a gold-rush vibe in the EA world, enough so that Nick Beckstead, CEO of the FTX Foundation and a longtime grant-maker for Open Philanthropy, posted an update in May clarifying the group’s methods. “Some people seem to think that our procedure for approving grants is roughly ‘YOLO #sendit,’” he wrote. “This impression isn’t accurate.”

But that impression nonetheless led to significant soul-searching in the EA community. The second most popular post ever on the EA Forum, the highly active message board where EAs share ideas in minute detail, is grimly titled, “Free-spending EA might be a big problem for optics and epistemics.” Author George Rosenfeld, a founder of the charitable fundraising group Raise, worried that the big surge in EA funding could lead to free-spending habits that alter the movement’s culture — and damage its reputation by making it look like EAs are using billionaires’ money to fund a cushy lifestyle for themselves, rather than sacrificing themselves to help others.

Rosenfeld’s is the second most popular post on the EA Forum. The most popular post is a partial response to him on the same topic by Will MacAskill, one of EA’s founders. MacAskill is now deeply involved in helping decide where the funding goes. Not only is he the movement’s leading intellectual, he’s on staff at the FTX Future Fund and an advisor at the EA grant-maker Longview Philanthropy.

He began, appropriately: “Well, things have gotten weird, haven’t they?”

The shift to longtermism

Comparing charities fighting global poverty is really hard. But it’s also, in a way, EA-on-easy-mode. You can actually run experiments and see if distributing bed nets saves lives (it does, by the way). The outcomes of interest are relatively short-term and the interventions evaluated can be rigorously tested, with little chance that giving will do more harm than good.

Hard mode comes in when you expand the group of people you’re aiming to help from humans alive right now to include humans (and other animals) alive thousands or millions of years from now.

From 2015 to the present, Open Philanthropy has distributed over $480 million to causes it considers related to “longtermism.” All $132 million given to date by the FTX Future Fund is, at least in theory, meant to promote longtermist ideas and goals.

Which raises an obvious question: What the fuck is longtermism?

The basic idea is simple: We could be at the very, very start of human history. Homo sapiens emerged some 200,000-300,000 years ago. If we destroy ourselves now, through nuclear war or climate change or a mass pandemic or out-of-control AI, or fail to prevent a natural existential catastrophe, those 300,000 years could be it.

But if we don’t destroy ourselves, they could just be the beginning. Typical mammal species last 1 million years — and some last much longer. Economist Max Roser at Our World in Data has estimated that if (as the UN expects) the world population stabilizes at 11 billion, greater wealth and nutrition lead average life expectancy to rise to 88, and humanity lasts another 800,000 years (in line with other mammals), there could be 100 trillion potential people in humanity’s future.

By contrast, only about 117 billion humans have ever lived, according to calculations by demographers Toshiko Kaneda and Carl Haub. In other words, if we stay alive for the duration of a typical mammalian species’ tenure on Earth, that means 99.9 percent of the humans who will ever live have yet to live.
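Those figures can be roughly reproduced. The sketch below assumes a steady-state population in which everyone lives exactly the average life expectancy, so that births per year equal population divided by life expectancy. That simplification is an assumption made here for illustration and is not necessarily Roser’s exact method; the variable names are likewise hypothetical.

```python
# Inputs are the figures quoted in the text above.
POPULATION = 11e9          # stabilized world population (UN expectation)
LIFE_EXPECTANCY = 88       # average lifespan in years
YEARS_REMAINING = 800_000  # further years humanity is assumed to last
PAST_HUMANS = 117e9        # Kaneda and Haub's count of humans who have ever lived

# Steady-state assumption: the population replaces itself once per lifespan.
births_per_year = POPULATION / LIFE_EXPECTANCY
future_people = births_per_year * YEARS_REMAINING

# Share of all humans, past and future, who have not yet been born.
future_share = future_people / (future_people + PAST_HUMANS)

print(f"{future_people:.2e} future people")
print(f"{future_share:.1%} of all humans have yet to live")
```

Under these assumptions the total comes to 100 trillion future people. The 117 billion figure also counts people alive today, so the share is an approximation, but at this scale that makes little difference to the rounded result.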

And those people, obviously, have virtually no voice in our current society, no vote for Congress or president, no union and no lobbyist. Effective altruists love finding causes that are important and neglected: What could be more important, and more neglected, than the trillions of intelligent beings in humanity’s future?

In 1984, Oxford philosopher Derek Parfit published his classic book on ethics, Reasons and Persons, which ended with a meditation on nuclear war. He asked readers to consider three scenarios:

1. Peace.

2. A nuclear war that kills 99 percent of the world’s existing population.

3. A nuclear war that kills 100 percent.

Obviously 2 and 3 are worse than 1. But Parfit argued that the difference between 1 and 2 paled in comparison to the difference between 2 and 3. “Civilization began only a few thousand years ago,” he noted. “If we do not destroy mankind, these few thousand years may be only a tiny fraction of the whole of civilized human history.” Scenario 3 isn’t just worse than 2, it’s dramatically worse, because by killing off the final 1 percent of humanity, scenario 3 destroys humanity’s whole future.

This line of thinking has led EAs to foreground existential threats as an especially consequential cause area. Even before Covid-19, EAs were early in being deeply concerned about the risk of a global pandemic, especially a human-made one coming about due to ever-cheaper biotech tools like CRISPR, which could be far worse than anything nature can cook up. Open Philanthropy spent over $65 million on the issue, including seven- and eight-figure grants to the Johns Hopkins Center for Health Security and the Nuclear Threat Initiative’s biodefense team, before 2020. It’s added another $70 million since. More recently, Bankman-Fried has funded a group led by his brother, Gabe, called Guarding Against Pandemics, which lobbies Congress to fund future pandemic prevention more aggressively.

Nuclear war has gotten some attention too: Longview Philanthropy, an EA-aligned grant-maker supported by both Open Philanthropy and FTX, recently hired Carl Robichaud, a longtime nuclear policy grant-maker, partly in reaction to more traditional donors like the MacArthur Foundation pulling back from trying to prevent nuclear war.

But it is AI that has been a dominant focus in EA over the last decade. In part this reflects the very real belief among many AI researchers that human-level AI could be coming soon — and could be a threat to humanity.

This is in no way a universal belief, but it’s a common enough one to be worrisome. A poll this year found that leading AI researchers put around 50-50 odds on AI surpassing humans “in all tasks” by 2059 — and that was before some of the biggest strides in recent AI research over the last five years. I will be 71 years old in 2061. It’s not even the long-term future; it’s within my expected lifetime. If you really believe superintelligent, perhaps impossible-to-control machines are coming in your lifetime, it makes sense to panic and spend big.

That said, the AI argument strikes many outside EA as deeply wrong-headed, even offensive. If you care so much about the long term, why focus on this when climate change is actually happening right now? And why care so much about the long term when there is still desperate poverty around the world? The most vociferous critics see the longtermist argument as a con, an excuse to do interesting computer science research rather than work directly in the Global South to solve actual people’s problems. The more temperate see longtermism as dangerously alienating effective altruists from the day-to-day practice of helping others.

I know this because I used to be one of these critics. I think, in retrospect, I was wrong, and I was wrong for a silly reason: I thought the idea of a super-intelligent AI was ridiculous, that these kind of nerdy charity folks had read too much sci-fi and were fantasizing wildly.

I don’t think that anymore. The pace of improvement in AI has gotten too rapid to ignore, and the damage that even dumb AI systems can do, when given too much societal control, is extreme. But I empathize deeply with people who have the reaction I did in 2015: who look at EA and see people who talked themselves out of giving money to poor people and into giving money to software engineers.

Moreover, while I buy the argument that AI safety is an urgent, important problem, I have much less faith that anyone has a tractable strategy for addressing it. (I’m not alone in that uncertainty — in a podcast interview with 80,000 Hours, Bankman-Fried said of AI risk, “I think it’s super important and I also don’t feel extremely confident on what the right thing to do is.”)

That, on its own, might not be a reason for inaction: If you have no reliable way to address a problem you really want to address, it sometimes makes sense to experiment and fund a bunch of different approaches in hopes that one of them will work. This is what funders like Open Phil have done to date.

But that approach doesn’t necessarily work when there’s huge “sign uncertainty” — when an intervention has a reasonable chance of making things better or worse.

This is a particularly relevant concern for AI. One of Open Phil’s early investments was a $30 million grant in 2017 to OpenAI, which has since emerged as one of the world’s leading AI labs. It has created the popular GPT-3 language model and DALL-E visual model, both major steps forward for machine learning models. The grant was intended to help by “creating an environment in which people can effectively do technical research on AI safety.” It may have done that — but it also may have simply accelerated the pace of progress toward advanced AI in a way that amplifies the dangers such AI represents. We just don’t know.

Partially for those reasons, I haven’t started giving to AI or longtermist causes just yet. When I donate to buy bed nets, I know for sure that I’m actually helping, not hurting. Our impact on the far future, though, is always less certain, no matter our intentions.

The move to politics

EA’s new wealth has also allowed it vastly more influence in an arena where the movement is bound to gain more attention and make new enemies: politics.

EA has always been about getting the best bang for your buck, and one of the best ways for philanthropists to get what they want has always been through politics. A philanthropist can donate $5 million to start their own school … or they can donate $5 million to lobby for education reforms that mold existing schools into something more like their ideal. The latter almost certainly will affect more students than the former.

So from at least the mid-2010s, EAs, and particularly EA donors, embraced political change as a lever, and they have some successes to show for it. The late 2010s shift of the Federal Reserve toward caring more about unemployment and less about inflation owes a substantial amount to advocacy from groups like Fed Up and Employ America — groups for which Open Philanthropy was the principal funder.

Tuna and Moskovitz have been major Democratic donors since 2016, when they spent $20 million for the party in an attempt to beat Donald Trump. The two gave even more, nearly $50 million, in 2020, largely through the super-PAC Future Forward. Moskovitz was the group’s dominant donor, but former Google CEO Eric Schmidt, Twitter co-founder Evan Williams, and Bankman-Fried supported it too. The watchdog group OpenSecrets listed Tuna as the 7th biggest donor to outside spending groups involved in the 2020 election — below the likes of the late Sheldon Adelson or Michael Bloomberg, but far above big-name donors like George Soros or Reid Hoffman. Bankman-Fried took 47th place, above the likes of Illinois governor and billionaire J.B. Pritzker and Steven Spielberg.

As in philanthropy, the EA political donor world has focused obsessively on maximizing impact per dollar. David Shor, the famous Democratic pollster, has consulted for Future Forward and similar groups for years; one of my first in-person interactions with him was at an EA Global conference in 2018, where he was trying to understand these people who were suddenly very interested in funding Democratic polling. He told me that Moskovitz’s team was the first he had ever seen who even asked how many votes-per-dollar a given ad buy or field operation would produce.

Bankman-Fried has been, if anything, more enthusiastic about getting into politics than Tuna and Moskovitz. His mother, Stanford Law professor Barbara Fried, helps lead the multi-million dollar Democratic donor group Mind the Gap. The pandemic prevention lobbying effort led by his brother Gabe was one of his first big philanthropic projects. And his super-PAC, Protect Our Future, led by longtime Shor colleague and dedicated EA Michael Sadowsky, has spent big on the 2022 midterms already. That includes $10 million supporting Carrick Flynn, a longtime EA who co-founded the Center for the Governance of AI at Oxford, in his unsuccessful run for Congress in Oregon.

That intervention made perfect sense if you’re immersed in the EA world. Flynn is a true believer; he’s obsessed with issues like AI safety and pandemic prevention. Getting someone like him in Congress would give the body a champion for those causes, which are largely orphaned within the House and Senate right now, and could go far with a member monomaniacally focused on them.

But to Oregon voters, little of it made sense. Willamette Week, the state’s big alt-weekly, published a cover-story exposé portraying the bid as a Bahamas-based crypto baron’s attempt to buy a seat in Congress, presumably to further crypto interests. It didn’t help that Bankman-Fried had made several recent trips to testify before Congress and argue for his preferred model of crypto regulation in the US — or that he prominently appeared at an FTX-sponsored crypto event in the Bahamas with Bill Clinton and Tony Blair, in a flex of his new wealth and influence. Bankman-Fried is lobbying Congress on crypto, he’s bankrolling some guy’s campaign for Congress — and he expects the world to believe that he isn’t doing that to get what he wants on crypto?

It was a big optical blunder, one that threatened to make not just Bankman-Fried but all of EA look like a craven cover for crypto interests. The Flynn campaign was a reminder of just how much of a culture gap remains between EA and the wider world, and in particular the world of politics.

And that gap could widen still more, and become more problematic as longtermism, with all its strangeness, becomes a bigger part of EA. “We should spend more to save people in poor countries from preventable diseases” is an intelligible, if not particularly widely held, position in American politics. “We should be representing the trillions of people who could be living millions of years from now” is not.

Yet for all its intellectual gymnastics, longtermism relies more on politics than near-term causes do. If political action isn’t possible on malaria, charities can and do simply procure bed nets directly. But when it comes to preventing extinction from causes like AI or a pandemic or climate change, the solutions have to be political.

All of which means that EA needs to get a lot better at politics, quite quickly, to achieve its biggest aims. If it fails, or lets its public image be that of just another special interest group, it might squander one of its biggest opportunities to do good.

What EA has meant to me

Things have indeed gotten weird in EA. The EA I know in 2022 is a more powerful and more idiosyncratic entity than the EA I met in 2013. And as it’s grown, it’s faced vocal backlash of a kind that didn’t exist in its early years.

A small clique of philosophy nerds donating their modest incomes doesn’t seem like a big enough deal to spark much outside critique. A multi-billion-dollar complex with designs on influencing the course of American politics and indeed all of world history … is a different matter.

I’ll admit it’s somewhat hard to write dispassionately about this movement. Not because it’s flawless (it has plenty of flaws) or because it’s too small and delicate to deserve the scrutiny (it has billions of dollars behind it). Rather, because it’s profoundly changed my own life, and overwhelmingly for the better.

I encountered effective altruism while I was a journalist covering federal public policy in the US. I lived and breathed Senate committee schedules, think tank reports, polling averages, outrage cycles about whatever Barack Obama or Mitt Romney said most recently. I don’t know if you’ve immersed yourself in American politics like that … but it’s a horrible place to live. Arguments were more often than not made in extreme bad faith. People’s attention was never focused on issues that mattered most to the largest number of people. Progress for actual people in the actual world, when it did happen, was maddeningly slow.

And it was getting worse. I started writing professionally in 2006, when the US was still occupying Iraq and a cataclysmic recession was around the corner. There were already dismal portents for our country’s institutions, but Donald Trump was still just a deranged game show host. One of the dominant parties was not yet attempting full coups with the help of armed mobs of supporters. The Senate’s huge geographic bias was not yet the enormous advantage for Republicans it has become today, rendering the body hugely unrepresentative in a way that offends basic democratic principles. All of that was still to come.

Derek Parfit, one of the key philosophers inspiring effective altruism, once wrote that he used to believe his own personal identity was the key thing that mattered in his life. This view trapped him. “My life seemed like a glass tunnel, through which I was moving faster every year, and at the end of which there was darkness,” he wrote in Reasons and Persons. “When I changed my view, the walls of my glass tunnel disappeared. I now live in the open air. There is still a difference between my life and the lives of other people. But the difference is less. Other people are closer. I am less concerned about the rest of my own life, and more concerned about the lives of others.”

Finding EA was a similarly transformative experience for me. The major point for me was less that this group of people had found, once and for all, the most effective ways to spend money to help people. They didn’t and they won’t, though more than most movements, they will admit that and cop to the limits of what they can learn about the world.

What was different was that I now had a sense that there was more to the world than the small corner I had dug into in Washington, DC — so much so that I was inspired to co-found this very section of Vox, Future Perfect. This is, in retrospect, an obvious revelation. If I had spent this period as a microbiologist as CRISPR emerged, it would have been obvious that there was more to the world than US politics. If I had spent the period living in India and watching the world’s largest democracy emerge from extreme poverty, it would have been obvious too.

But what’s distinctive about EA is that because its whole purpose is to shine light on important problems and solutions in the world that are being neglected, it’s a very efficient machine for broadening your world. And especially as a journalist, that’s an immensely liberating feeling. The most notable thing about gatherings of EAs is how deeply weird and fascinating they can be, when so much else about this job can be dully predictable.

After you spend a weekend with a group where one person is researching how broadly deployed ultraviolet light could dramatically reduce viral illness; where another person is developing protein-rich foods that could be edible in the event of a nuclear or climate or AI disaster; where a third person is trying to determine if insects are capable of consciousness, it’s hard to go back to another DC panel where people rehearse the same arguments about whether taxes should be higher or lower.

As EA changes and grows, this is the aspect I feel most protective of and most want to preserve: the curious, unusually rigorous and respectful and gracious, and always wonderfully bizarre spirit of inquiry, of going where the arguments lead you and not where it’s necessarily most fashionable to go.

That felt like an escape from American politics to me — and it can be an escape from other rabbit holes for others too. Oh, yeah, and it could, if successful, save many people from disease, many animals from industrial torture, and many future people from ruin.

The Against Malaria Foundation, MacAskill notes, has since its founding “raised $460 million, in large part because of GiveWell’s recommendation. Because of that funding, 400 million people have been protected against malaria for two years each; that’s a third of the population of sub-Saharan Africa. It’s saved on the order of 100,000 lives — the population of a small city. We did that.” That’s a somewhat boisterous statement. It’s also true, and if anything a lower bound on what EA has achieved to date.

My anxieties about EA’s evolution, as it tends toward longtermism and gets more political, are bound up in pride at that achievement, in the intelligent environment that the movement has fostered, and fear that it could all come crashing down. That worry is particularly pronounced when the actions and fortunes of a handful of mega-donors weigh heavily on the whole movement’s future. Small, relatively insular movements can achieve a great deal, but they can also collapse in on themselves if mismanaged.

My attitude toward EA is, of course, heavily personal. But even if you have no interest in the movement or its ideas, you should care about its destiny. It’s changed thousands of lives to date. Yours could be next. And if the movement is careful, it could be for the better.

Author: Dylan Matthews
