Expert surveys and online prediction projects have limitations — but we need them badly.

Thomas McAndrew, a researcher at the University of Massachusetts Amherst, has been running weekly surveys of experts about the coronavirus pandemic. He sends each survey to 18 experts in epidemiology and infectious disease modeling and asks them several questions about the course of the pandemic, including how many confirmed cases they think there’ll be by a certain date and how many deaths we’ll see by the end of 2020.

So far, it has been a useful exercise — mostly for demonstrating that experts aren’t particularly good predictors.

That’s not a knock on experts: Predicting the future is really, really hard. That it is so hard is actually highly consequential. Getting predictions right would be supremely useful to the human race.

When it comes to Covid-19, if the US had a crystal ball and knew exactly what was ahead, it could better tailor its relief plans and responses. The last few weeks have been so painful and terrifying in part because most people were repeatedly taken by surprise; if the country knew what was coming, even something painful, Americans could brace for it.

There’s no crystal ball. But there are people trying to make these predictions anyway. Some people are unusually good at predictions, and aggregating crowd predictions can produce even better ones (more on those below). Similarly, reputation-based prediction markets and betting markets are often pretty good at predictions (except where laws prevent them from having a liquid market).

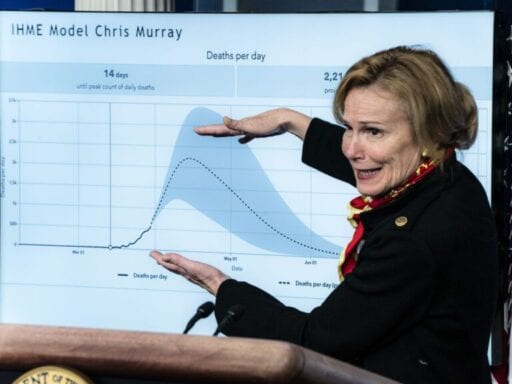

Now, one thing I should make clear is what exactly predictions attempt to do and how they’re distinct from the models being put forward by scientists. One high-profile example of such a model is the Imperial College paper that forecast 40 million deaths worldwide should the world do nothing in the face of the pandemic. Models put forward a range of possibilities given a certain set of assumptions — if we pursue x policy, that could lead to 100,000-200,000 deaths; if we pursue y policy, that could lead to 1 million-plus deaths. That’s what the Imperial College model did: It made an assumption (the world won’t act to stop the pandemic) and it estimated the number of deaths based on that assumption.

A prediction essentially takes the next step. With a prediction, you’re making your best guess about which assumptions will actually play out in reality. You’re hazarding a guess that, say, the country will pursue x policy, and because of that, you estimate there’ll be 100,000-200,000 deaths.


Win McNamee/Getty Images

The exact course of this pandemic is incredibly difficult to predict. And while forecasters and betting markets do pretty well under ordinary circumstances, they haven’t been tested on a large scale against extraordinary ones like this before.

As someone who cares a lot about how to make predictions we can actually use for policy, I’m watching these sources of coronavirus predictions closely: hoping that one of them, or maybe several of them, will emerge as a good place to look to understand our future.

Panels of experts asked to predict the virus aren’t great at it

McAndrew first started sending his surveys out between February 18 and 20. Almost immediately, it became clear that predicting the course of the pandemic wouldn’t be easy, even for those who were closely following it.

In a March 16-17 survey, experts estimated that by March 29, there would be about 20,000 cases in the US, and that the true number was 80 percent likely to fall between 10,500 and 81,500 cases. In reality, Johns Hopkins University’s coronavirus tracker reported that there were 142,178 cases on March 29.

Subsequent surveys have shown that the experts do better with shorter time horizons. In the most recent survey, administered March 30-31, respondents estimated that there would be on average 386,500 cases in the US by April 5, with a range of 280,500-581,500 cases. (The report had a typo: the Monday it referred to was actually April 6.) Either way, the Johns Hopkins counts for April 5 (336,384) and April 6 (366,239) both fell within the experts’ range.
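To make the contrast between the two surveys concrete, here is a small sketch that checks each Johns Hopkins count against the range the experts gave. The numbers are the ones quoted above; the function and variable names are made up for illustration. One assumption to flag: the article describes only the March 16-17 range as an 80 percent interval, so the March 30-31 range is treated simply as the stated range.

```python
# Check the Johns Hopkins case counts quoted in this article against the
# ranges from McAndrew's expert surveys. Names here are illustrative only.
# Assumption: only the March 16-17 range is described as an 80 percent
# interval; the March 30-31 range is treated simply as the stated range.

surveys = [
    # (survey dates, target date, low, high, Johns Hopkins count)
    ("March 16-17", "March 29", 10_500, 81_500, 142_178),
    ("March 30-31", "April 5", 280_500, 581_500, 336_384),
    ("March 30-31", "April 6", 280_500, 581_500, 366_239),
]


def check(low, high, actual):
    """Return whether the actual count fell inside [low, high], plus the
    ratio of the actual count to the upper bound (above 1.0 means the
    count exceeded the top of the range)."""
    inside = low <= actual <= high
    ratio_to_high = actual / high
    return inside, ratio_to_high


for survey, target, low, high, actual in surveys:
    inside, ratio = check(low, high, actual)
    print(
        f"{survey} survey, {target}: range {low:,}-{high:,}, "
        f"actual {actual:,}, inside={inside}, actual/upper={ratio:.2f}"
    )
```

The March 29 count lands outside the earlier range, at roughly 1.7 times its upper bound, while both April counts sit comfortably inside the later one. That is the same pattern described above: the shorter horizon was the easier call.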

But the fact that there’s so much error in predicting just a few weeks into the future makes it hard to have confidence in the longer-term predictions in the survey, like expected deaths in the US this year. (As of the most recent survey, experts estimated 262,500 deaths in the country in 2020.)

That’s frustrating, because McAndrew is asking a lot of questions that I’d really love to have reliable answers to. For example, the survey asks what percentage of current cases in the US have been detected. Are most sick people being tested, or only a tiny fraction of them? It also asks when hospitalizations will peak in the US, and how the outbreak in New York will unfold.

The surveys are especially notable because of who’s participating. “Participants are modeling experts and researchers who have spent a substantial amount of time in their professional career designing, building, and/or interpreting models to explain and understand infectious disease dynamics and/or the associated policy implications in human populations,” McAndrew wrote in his March 18 survey report.

But that hasn’t been enough for them to consistently predict the spread of the virus. Indeed, expertise may not necessarily be an asset. “Being an expert is not necessarily helpful,” Carnegie Mellon researcher Roni Rosenfeld told my colleague Sigal Samuel. “What is helpful is paying attention to detail and being very conscientious about it.” That’s a view shared by other prediction researchers, too.

Why is it so hard to predict the spread and impact of the coronavirus? Well, it requires getting so many different, difficult predictions right. First, you have to estimate some features of the virus — how contagious is it? How much transmission is from close contact and how much is from contaminated surfaces or brief interactions? How much transmission is from people who are asymptomatic? How much does wider use of face masks help?

Next, you have to estimate large-scale political and economic questions that are without precedent. How strongly will governments respond? Will they lock down, and when? Will the lockdowns be sustained for as long as it takes to quash the virus? When they’re lifted, will there be a resurgence, and how will a population wearied by months of lockdowns respond to the resurgence?

And then there are scientific questions: Will a vaccine be developed? What about a good course of treatment with drugs?

That’s a very long list of questions to predict.

Can individual non-experts predict the course of the pandemic?

McAndrew’s survey isn’t the only exercise of its kind. There’s a whole world of forecasting out there fixated on a particular question: How can we get better at predicting the future? And that world has turned its attention to coronavirus.

Philip Tetlock is a psychologist at the University of Pennsylvania who studies how to get better at predicting things. In a 2005 book called Expert Political Judgment, he published the results from asking experts questions about current events and political and economic trends. The experts did really poorly: Their performance was worse than random guessing, and knowing more about geopolitics didn’t make them better at predicting it (in fact, it made them worse).

Tetlock then tested a large cohort of smart non-experts on a series of current events questions in an extensive research effort called the Good Judgment Project. Some of them performed much, much better than others. Tetlock called the top 2 percent “superforecasters.” They weren’t domain experts, but they did remarkably well at predicting future events across many different fields, much better than the experts typically did.

The Good Judgment Project has continued to this day, with an open predictions platform where anyone can weigh in on current events. Most of us aren’t superforecasters, so you might expect that the open platform would perform much worse than Tetlock’s original highly selected superforecasters — but on the right sort of questions, the prediction platform does quite well.

Their coronavirus outbreak page shows how their predictions have been holding up so far. In early February, for example, the forecasters correctly predicted as a group that between five and 11 states would have confirmed cases by the end of February, and that California would hit 25 confirmed cases in March.

They anticipated that as of mid-February the US was likely in for the long haul, that the public health emergency declaration from the World Health Organization would remain in place through May, and that the CDC’s travel warning about China would persist through June.

There are 27 questions now open, many of them with dozens of predictions registered. More questions, some with more than a thousand forecasts, have already been resolved. The most-answered question, posted in late January, asks, “How many total cases of Wuhan coronavirus (2019-nCoV) will have been reported by the World Health Organization (WHO) as of 20 March 2020?”

By early February, most forecasters on the site were guessing between 100,000 and 200,000. The count surpassed 200,000 cases on March 17. So they were technically wrong, but they were a lot closer than most people were in early February.

This reasonably good track record is enough to make the Good Judgment Project site oddly compelling; I flipped through it for hours, looking for answers about how many people could die by this summer, when the NBA will next play a game (not any time soon, most predictors think), and whether the S&P 500 will recover by this summer (predictors are split; predicting the market is much harder than predicting current events, because lots of people are already predicting the market by betting money on it).

Another similarly compelling prediction site is Metaculus. The setup is similar: Anyone can log in and add their own judgment to the fray. Its forecasters’ weekly predictions of new case numbers have been consistently closer to the mark than the results from expert surveys, though the two groups have asked different questions, which makes an exact, direct comparison impossible.

Since anyone can submit a question, the questions range from the mission-critical — when will a vaccine be widely available — to the morbid — will all major US presidential candidates survive? Reading through them, I want to believe that this prediction strategy works: that we’ve found a way to aggregate our expertise and cast some clarity on our situation. But for most of the predictions it’s too early to tell.

Should sites like these feature more prominently in our planning and response? I think so. Even if they’re subject to many limitations, as long as they’re subject to different limitations than experts or the pandemic models that have gotten widespread attention, they can be a valuable addition to our overall state of knowledge.

They’re also worth participating in individually. The coronavirus pandemic is fast-moving, and predictions about what will happen next are falsified repeatedly, often within a matter of days. Registering a prediction helps improve aggregate prediction accuracy, through the power of crowds; it also helps increase awareness of the strengths and limitations of our own predictive ability.

Tetlock noticed that there were systematic differences between his superforecasters and everyone else, but those differences weren’t magic, and were in fact quite straightforward. Superforecasters made predictions more often. They made predictions specific enough that they’d notice when they were falsified. They changed their minds frequently, a little at a time, instead of in dramatic swings. Registering forecasts is a great way to practice all of those things, and to become someone who is taken a little bit less by surprise.


Future Perfect is funded in part by individual contributions, grants, and sponsorships.

Author: Kelsey Piper
