Is DC doomed to make the same mistakes with AI that it made with social media?

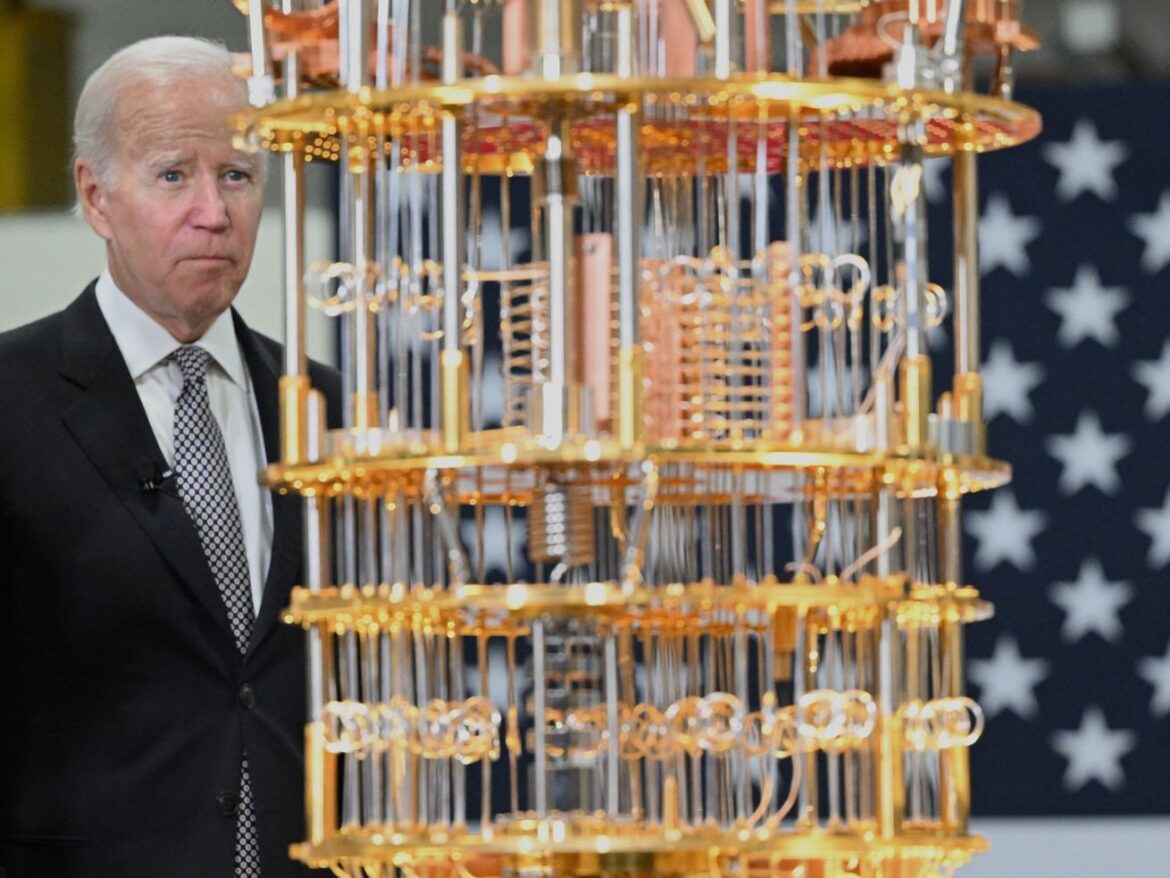

Joe Biden has used ChatGPT, and he’s reportedly “fascinated” by it. That makes him a lot like most of us, who have marveled over generative AI’s ability to create words, images, audio, and video from simple prompts since the technology became widely available to the general public in 2022. But Biden is also different from everyone else because he’s the president of the United States, with the authority and the responsibility to make sure that technological advances are also safe.

His administration is now trying to figure out how to do that. Congress might be, too. Europe definitely is, and at a comparatively rapid pace. While the US government wants to at least appear to be considering and mitigating any potential harms from generative AI, how it will do that remains to be seen, especially given Washington’s spotty track record when it comes to reining in Big Tech.

The latest entrant to the generative AI arms race is Google, which has lagged behind rival Microsoft with its generative AI-powered chatbot and search. Google announced on Wednesday that it would be integrating its AI offerings into just about all of its major products, including Google Docs. That includes Google Search, the tool that most of America uses all the time to navigate the internet.

AI isn’t new, and neither are attempts to regulate it. But generative AI is a big leap forward, and so are the problems or dangers it could unleash on a world that isn’t ready for it. Those include disinformation spread by convincing deepfakes and misinformation spread by chatbots that “hallucinate,” or make up facts and information. Inherent biases could cause people to be discriminated against. Millions of people might suddenly be put out of work, while intellectual property and privacy rights are bound to be threatened by the technology’s appetite for data to train on. And the computing power needed to support AI technology makes it prohibitively expensive to develop and run, leaving all but a few rich and powerful companies to rule the market.

We’ve been largely relying on these big technology companies to regulate themselves. That strategy hasn’t worked so well in the past because businesses only voluntarily play things safe when it’s in their own best interests to do so. But the government has a chance to get ahead of things now, rather than try to deal with problems after they come up and are far harder to solve. The government is also historically slow or unable to take on tech companies, between administrations with limited powers, courts that tend to rule in businesses’ best interests, and a Congress that can’t seem to pass any laws.

“Congress is behind the eight ball. If our incapacity was a problem with traditional internet platforms like Facebook, the issue is ten times as urgent with AI,” Sen. Michael Bennet (D-CO) told Vox.

With Google, Microsoft, and a host of other tech companies now rushing their AI services out to as many of their products as possible, it might be even more urgent than that.

The White House can move faster than Congress — and it is

The Biden administration has recently stepped up its ongoing efforts to ensure that AI is responsible and safe. On May 4, after several members of the Biden administration met with the CEOs of companies at the forefront of generative AI (Google, Anthropic, Microsoft, and OpenAI), the White House announced several actions meant to “protect people’s rights and safety” while promoting “responsible AI innovation.” Those include funding for several new research institutes; a “public evaluation” of some existing generative AI systems this August at DEF CON, a security conference in Las Vegas; and policy guidance from the Office of Management and Budget on how the federal government uses AI systems.

This builds on the administration’s previous actions, which have ramped up alongside generative AI. Last fall’s AI Bill of Rights established a set of protections Americans should have from AI systems. According to the document, the public is entitled to safe and effective systems; it deserves protections from algorithmic discrimination and data privacy invasions; and it should be told when AI is being used and given the ability to opt out. And while these sound all well and good, they’re just recommendations, and compliance with them is voluntary.

To a certain extent, federal agencies are already empowered to create regulations that may apply to generative AI products and enforce consequences for companies that violate them. The Biden administration has directed agencies to protect Americans from harmful AI within their own purviews. For example, the Equal Employment Opportunity Commission tackles issues related to employment and discrimination, and the Copyright Office and the Patent and Trademark Office are looking into whether and how to apply their intellectual property rules to generative AI.

The National Telecommunications and Information Administration is currently asking for comments on accountability policies that would ensure AI tools are “legal, effective, ethical, safe, and otherwise trustworthy.” These, the agency says, will include “adequate internal or external enforcement to provide for accountability.”

And there are things agencies already have the power to do. The heads of the EEOC, Consumer Financial Protection Bureau, Department of Justice’s Civil Rights Division, and Federal Trade Commission recently issued a joint statement declaring that they have the authority to regulate AI within their respective spaces and that they will enforce those rules when needed. The AI Now Institute, which is run by two former AI advisers to the FTC, recently put out a report that, among other things, made the case for how competition policy can be used to keep AI companies in check.

“This flavor of artificial intelligence that involves massive amounts of data and massive amounts of computational power to process it has structural dependencies on resources that only a few firms have access to,” said Sarah Myers West, managing director of AI Now Institute. “Competition law is central to regulating generative AI and AI more broadly.”

West noted that Microsoft has already done a few questionable things to disadvantage competitors, like reportedly forbidding companies from using its Bing search index to power their own chatbots. That’s the kind of thing the FTC or the antitrust arm of the Department of Justice could potentially act on.

Meanwhile, FTC chair Lina Khan has indicated that she is ready and willing to use the agency’s competition and consumer protection mandates to keep AI companies in check. She recently wrote a New York Times op-ed saying that the consequences of unchecked growth of Web 2.0 should serve as an impetus for agencies to act now on AI. The FTC, she said, will be vigilant.

“Although these tools are novel, they are not exempt from existing rules, and the FTC will vigorously enforce the laws we are charged with administering, even in this new market,” Khan wrote.

The problem with the administration’s efforts here is that administrations change, and the next one may not have the same vision for AI regulations. The Trump administration, which predated the meteoric rise of generative AI, was open to some agency oversight, but it also didn’t want those agencies to “needlessly hamper AI innovation and growth,” especially not in the face of China’s 2017 vow to become the world leader in AI by 2030. If Republicans win the White House in 2024, we’ll likely see a more hands-off, business-friendly approach to AI regulations. And even if they don’t, agencies are still limited by what laws give them the authority to oversee.

The self-regulating option that always fails

Many federal lawmakers have learned that Big Tech and social media companies can operate recklessly when guardrails are self-imposed. But those lessons haven’t resulted in much by way of actual laws, even after the consequences of not having them became obvious and even when both parties say they want them.

Before generative AI was widely released, AI was still a concern for the government. There were fears, for example, about algorithmic accountability and facial recognition. The House has had an AI Caucus since 2017, and the Senate’s counterpart dates back to 2019. Federal, state, and even local governments have been considering those issues for years. Illinois has had a facial recognition law on its books since 2008, which says businesses must get users’ permission to collect their biometric information. Meta is one of several companies that have run afoul of this law over the years and had to pay big fines as a result.

There’s plenty of reason to believe that tech companies won’t adequately regulate themselves when it comes to AI. While they often make it a point to say that they value safety, want to develop responsible AI platforms, and employ responsible AI teams, those concerns are secondary to their business interests. Responsible AI researchers risk getting fired if they speak out negatively about the products they’re investigating, or laid off if their employer needs to reduce its headcount. Google held off on releasing its AI systems to the public for years, fearing that it hadn’t yet considered all of the problems those could cause. But when Microsoft and OpenAI made their offerings widely available early this year, Google aggressively accelerated the development and release of its AI products to compete. Its chatbot, Bard, came out six weeks after Microsoft’s.

On the same day that Biden met with several AI CEOs in May, Microsoft announced it was expanding its new Bing and Edge, which incorporate OpenAI’s tools. They’re now more widely available and have a few new features. And while Microsoft says it’s committed to a “responsible approach” to AI, Bing’s chatbot still has issues with hallucinating. Microsoft is forging ahead and monetizing the results of its investment in OpenAI, waving off concerns by saying that Bing is still in preview and mistakes are bound to happen.

A week after that, Google made its announcement about integrating AI into pretty much everything, making sure to highlight some of the tools it has developed to ensure that Google’s AI products are responsible (according to Google’s parameters).

Congress has yet to come together on a plan for AI

Finally, Congress can always pass laws that would directly address generative AI. It’s far from certain that it will. Few bills that deal with online dangers — from privacy to facial recognition to AI — get passed. Big Tech-specific antitrust bills largely languished last Congress. But several members of Congress have been outspoken about the need for or desire to create laws to deal with AI. Rep. Ted Lieu (D-CA) asked ChatGPT to write a bill that directs Congress to “focus on AI” to ensure that it’s a safe and ethical technology. He has also written that AI “freaks me out.”

Bennet, the Colorado senator, recently introduced the Assuring Safe, Secure, Ethical, and Stable Systems for AI (ASSESS) Act, which would ensure that the federal government uses AI in an ethical way.

“As the government begins to use AI, we need to make sure we do so in a way that’s consistent with our civil liberties, civil rights, and privacy rights,” Bennet said.

Bennet would also like to see a new agency dedicated to regulating Big Tech, including its use of generative AI. He introduced a bill last session that would create such an agency, the Digital Platform Commission Act. But getting Congress to go for standing up a new agency is a tall order when so many lawmakers bristle at the idea of the existing agencies exerting the powers they already have.

Congress has a well-deserved reputation for being very much behind the times, both in understanding what new technologies are and in passing legislation that deals with the new and unique harms they may cause. Lawmakers have also been reluctant to make laws that could inhibit these companies’ growth or give any kind of advantage to another country, especially to China (which, by the way, is developing its own AI regulations).

Daren Orzechowski, a lawyer who often represents tech companies, said it’s important for regulations not to be so heavy-handed that they stop companies from developing technologies that may well improve society.

“The more prudent approach might be to set some guardrails and some guidelines versus being overly restrictive,” he said. “Because if we are overly restrictive, we may lose out on some really good innovation that can help in a lot of ways.”

That said, it does at least appear that some legislation will be coming out of Congress in the coming months. Sen. Chuck Schumer (D-NY) announced in April that he has created a “framework” for laws that deal with AI. While his position as Senate majority leader means he has control over which bills get a floor vote, the Democrats don’t have the majority needed to pass them without at least some Republicans jumping on board. And getting any such bill past the Republican-controlled House is another story altogether. It’s also not known how much support Schumer’s plan will get within his own party; reportedly, very few members of Congress knew about this plan at all.

“Look at how privacy laws have progressed on the federal level,” Orzechowski said. “I think that’s probably an indicator that it’s not likely something will be passed to touch on this area when they haven’t been able to get something passed with respect to privacy.”

Real guardrails for generative AI may not come from the US at all. The European Union appears to have taken the lead on regulating the technology. The AI Act, which has been in the works for years, would classify AI technology by risk levels, with corresponding rules according to those risks. The European Commission recently added a new section for generative AI, including copyright rules. ChatGPT was also temporarily banned in Italy over possible breaches of the EU’s General Data Protection Regulation, illustrating how some of the EU’s existing regulations for online services can be applied to new technologies.

It all just shows how other countries are already willing and able to deal with AI’s potential for harm, while the US scrambles to figure out what, if anything, it will do.